What if we could give people and companies the superpower to better see what other people truly mean when they’re chatting with each other? That was our mission about a year ago, and we’ve got some wonderful results: we can now read between the lines. Well, sort of.

Please note: This article and its materials are published here courtesy of Mirabeau, the company I wrote this article for.

Mirabeau always puts communication and the exchange of value between people at the core of its projects. You could call this Human Centered Design: creating empathetic products that people are inclined to use because they experience some form of value.

From that point of view we found that text messaging and other digital communication lack the emotional fidelity we experience when we can see, hear and touch each other. We have countered that lack somewhat haphazardly by using emojis to express the ‘tone’ or ‘intent’ we could otherwise not express without being very verbose.

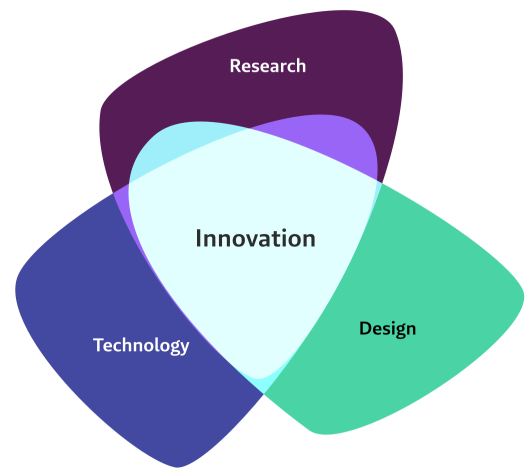

Design, research and tech at the heart of innovation

That’s why we started researching and designing solutions that help people communicate better with the aid of A.I., emojis and pattern recognition software. Our researchers, interns and innovation programme aim to design and discover new, empathetic ways to communicate in a better, more human way.

Traditionally, Mirabeau combines design, tech and research to power our clients to new heights. That’s why our innovation programme is not just about applying new technology, but specifically about designing new, appealing, empathetic, smooth and communicative ways to add value to people’s lives. For our innovation programme, design is our engine and technology is our fuel.

Learn to read between the lines

Derisa Chiu is one of our graduating researchers who helped us discover the meaning and limitations of digital communication. Specifically, she pinpointed the possible value of ‘completing’ textual communication with emotion cues.

Emotion cues are additions to the text in your messenger (Facebook Messenger, WhatsApp, etc.), such as emojis, facial expressions, gestures and even the use of specific words, which all combine into an emotion map. Derisa’s research showed the value of emotion cues for professional health care providers, such as doctors doing aftercare and precare with their patients via text messages.

What if we could put the melody to the lyrics of our messages?

The research grew out of one of the ‘what if’ scenarios we start our discovery with.

We started with: ‘What if my mobile could translate my facial expressions and body language into emotion cues expressed in emojis?’

Our analogy for this: the emotions we express are the melody, and the information we exchange in a conversation is the lyrics.

Basically, we would insert the emotions (in the form of emojis) into the automatically transcribed voice message, giving the people who receive it the means to interpret what you actually mean.
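
To make the idea a bit more tangible, here is a minimal Python sketch of how emojis could be woven into a transcript. It is not the code behind our prototypes. The `Word` and `EmotionCue` types, their timestamps and the `interject` function are assumptions for illustration: we simply assume the transcription service gives us a start time per word and the emotion detector gives us a time per cue.

```python
from dataclasses import dataclass


@dataclass
class Word:
    text: str
    start: float  # seconds from the start of the recording (assumed field)


@dataclass
class EmotionCue:
    emoji: str
    time: float  # seconds from the start of the recording (assumed field)


def interject(words: list[Word], cues: list[EmotionCue]) -> str:
    """Place each emoji after the word being spoken when the cue was detected.

    Assumes `words` is already ordered by start time. Cues detected before
    the first word are placed after the first word.
    """
    parts: list[str] = []
    pending = sorted(cues, key=lambda cue: cue.time)
    next_cue = 0
    for index, word in enumerate(words):
        parts.append(word.text)
        # A cue belongs to this word if it happens before the next word starts.
        if index + 1 < len(words):
            next_start = words[index + 1].start
        else:
            next_start = float("inf")
        while next_cue < len(pending) and pending[next_cue].time < next_start:
            parts.append(pending[next_cue].emoji)
            next_cue += 1
    return " ".join(parts)


message = interject(
    [Word("I'm", 0.0), Word("fine", 0.4), Word("really", 1.2)],
    [EmotionCue("😟", 0.6)],
)
print(message)
```

In this example the worried face lands right after ‘fine’, which is exactly the kind of hint that changes how the sentence reads.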

A doctor with super powers to better help patients

In her research, Derisa also worked with BeterDichtbij to get feedback and connect with actual doctors. BeterDichtbij is a value-added service concept connecting patients with their care providers. They started with a messaging service that puts patients directly in touch with their doctor, for example to exchange questions and research results.

Doctors and their patients might be the perfect group of people to ask if a better understanding of their communication might strengthen their relationship.

In a doctor-patient scenario, a better understanding might be ‘seeing’ that a patient is confused, in pain, happy or sad. That emotion would be derived from non-verbal and textual information and offered to the doctor as a hint, through emojis or even concrete tips based on the information at hand.

The doctor would be able to provide the right care: not only an answer to the question, but first or additionally some feedback to the emotion.

Innovation is: Putting the research to the test

In her research, Derisa used a couple of prototypes to gauge how inclined doctors were to use the interpreted emotion map. This map showed the emotion of the patient based on non-verbal cues. Young doctors in particular were keen on using it to learn to communicate better with their patients.

Another prototype even gave hints to the doctor to not only gauge the emotion, but also respond to it and turn it into a chance to improve the situation of the patient.

These findings are just the tip of the iceberg of Derisa’s research. Make sure to read all her findings and research in her paper.

Prototype 1: Facial expression to Emoji

To put the theory to the test, we connected Derisa with Martijn and built a facial expression detector in Unity. Unity is a well-known game engine that we also use to make augmented reality (AR) experiences. With Unity, Martijn made a test application that figures out where your face is and sends a picture of your face to an emotion-detecting API.

As Derisa’s research also points out, there are several base emotions, and Martijn’s app basically boils your facial expression down to an emoji.
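
The ‘boiling down’ step could look roughly like the Python sketch below. This is an illustration, not Martijn’s Unity code. We assume the emotion-detecting API returns a confidence score between 0 and 1 per emotion. The emotion names, the `EMOJI_BY_EMOTION` table, the `to_emoji` function and the threshold are all hypothetical.

```python
# Hypothetical mapping from base emotions to emojis; the real set of
# emotions depends on the API and on the research model you follow.
EMOJI_BY_EMOTION = {
    "happiness": "😀",
    "sadness": "😢",
    "anger": "😠",
    "fear": "😨",
    "surprise": "😮",
    "disgust": "🤢",
}
NEUTRAL = "😐"


def to_emoji(scores: dict[str, float], threshold: float = 0.5) -> str:
    """Return the emoji for the strongest emotion, or a neutral face.

    `scores` is assumed to map emotion names to confidences in [0, 1].
    """
    if not scores:
        return NEUTRAL
    emotion, score = max(scores.items(), key=lambda item: item[1])
    if score < threshold:
        # Nothing stands out clearly enough, so do not guess.
        return NEUTRAL
    return EMOJI_BY_EMOTION.get(emotion, NEUTRAL)


print(to_emoji({"happiness": 0.8, "sadness": 0.1}))
```

The threshold is a design choice: showing a neutral face when the detector is unsure seems safer than confidently showing the wrong emotion.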

We think this test is very promising. Although it is still very crude, recording facial expressions might in the near future help put a smile in our text messages and teach us more about our ability to express ourselves in more than one way.

The next step for this prototype is to ‘record’ the facial expression as a stream of emojis that offers context to the textual information.
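
One simple way to think about such a stream, sketched in Python under our own assumptions: if the detector produces an emoji for every analysed frame, we only need to keep the moments where the expression changes. The `(time, emoji)` tuples and the `emoji_stream` function are hypothetical and not part of the prototype.

```python
from itertools import groupby


def emoji_stream(frames: list[tuple[float, str]]) -> list[tuple[float, str]]:
    """Keep only the moments where the detected emoji changes.

    `frames` is assumed to be a time-ordered list of (seconds, emoji) pairs,
    one per analysed camera frame.
    """
    stream = []
    # groupby bundles consecutive frames that show the same emoji.
    for emoji, run in groupby(frames, key=lambda frame: frame[1]):
        first_time, _ = next(run)
        stream.append((first_time, emoji))
    return stream


frames = [(0.0, "😐"), (0.5, "😐"), (1.0, "😀"), (1.5, "😀"), (2.0, "😐")]
print(emoji_stream(frames))
```

The result is a short list of timestamped changes that can be lined up with the text of the message.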

Prototype 2: Read between the lines with ToneBot

Our next prototype was a bit more complicated. We wanted a real-time subtitle generator that also analyses the sentiment of the words we use while speaking. We thought we could do that with the voice-to-text services recently released by Amazon and Google.

These services can transcribe what you say, but also dissect the sentences, just like you did in primary school. They let us break the sentences down into meaningful building blocks, such as the subject of a sentence. This way we know what people are talking about. That leaves us with the emotions.

IBM’s Watson (yes, the AI that won the quiz show Jeopardy!) provided us with an ‘intent’ detecting service. Intent gives us the sentiment with which people talk about topics. We also get the degree of that sentiment.
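
To show what a bot could do with a topic, a sentiment and a degree, here is a small Python sketch. It does not call Watson and does not mirror its response format. The `TopicSentiment` type, the score range of -1.0 to 1.0 and the cut-off values in `describe` are assumptions for illustration.

```python
from dataclasses import dataclass


@dataclass
class TopicSentiment:
    topic: str
    label: str    # assumed values: "positive", "negative" or "neutral"
    score: float  # assumed range: -1.0 (very negative) to 1.0 (very positive)


def describe(result: TopicSentiment) -> str:
    """Turn one analysis result into a short line a chat bot could post."""
    strength = abs(result.score)
    if result.label == "neutral" or strength < 0.25:
        return f"neutral about {result.topic}"
    # The cut-offs below are arbitrary and would need tuning on real data.
    if strength >= 0.75:
        degree = "strongly"
    elif strength >= 0.5:
        degree = "clearly"
    else:
        degree = "mildly"
    return f"{degree} {result.label} about {result.topic}"


print(describe(TopicSentiment("the waiting time", "negative", -0.8)))
```

A line like ‘strongly negative about the waiting time’ is the sort of hint a bot could post alongside the transcript.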

In the demo below, we show how a conversation can be transcribed, broken down and analysed in real time by a robot. In our case we used Discord to ‘play’ our transcript, and our sentiment robot ‘Mr Tone’ provides us with a real-time intent play-by-play.

Of course this is still very awkward and rudimentary, but the degree of accuracy we measured with Mr Tone gave us the confidence to explore this technology further.

Conclusion: designing with tech to become more human?

Above you read about and saw the first signs of design and technology that might help us understand each other through digital means. We’re aware that these tests, although cute and sometimes silly, are still very much tests, but they are tests that might brighten up our future understanding of digital communication, be it between people, with companies, or even with our surroundings.

We found in our research, prototypes and innovations that technology can help us become closer and communicate in a more human way. It sounds like a paradox, but where technology up until now has given us ease, speed and value at low cost, it seems it has also put a barrier between us.

All apps, services and digital tools are personal, but they do not promote a personal and human way to connect. One might even venture to say that social networks not only serve the investor or advertiser first, but also make our individualised digital world even more isolated.

At Mirabeau we think we should use empathetic design and new digital technology to break down the barriers and truly connect people. Relationships between patients and doctors, but also between people in general, should be as unfiltered as possible, with the highest fidelity we can muster.

Our commitment therefore is to create new, imaginative ways to leverage the power of technology to invisibly but noticeably help people understand each other better and have meaningful and valuable relationships with each other.

Next steps: put empathetic design and innovation to work for your company

We already have a couple of projects under way to further test and develop new ways to connect people and enhance understanding. We think this is also the time for your company to join us. Companies like BeterDichtbij are leading the way in approaching their market more empathetically to create value, ease and more humanity, and we believe this could be the next wave of digital design.

Want to know more about understanding intent and natural language technology, learn how to apply human centered design in your service stack, or discover new ways to leverage innovation in your company? Get in touch with Peet Sneekes (psneekes@mirabeau.nl).