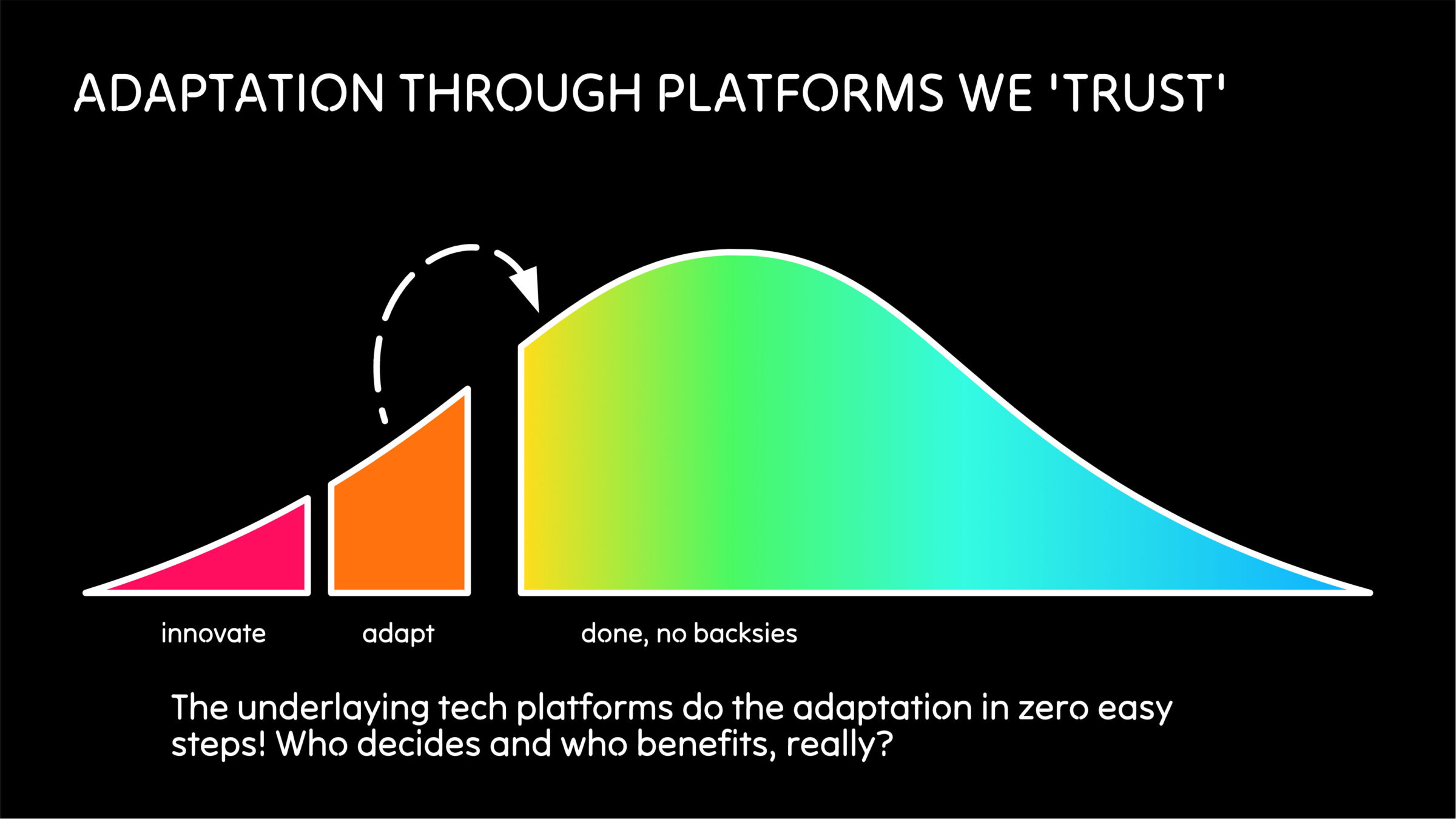

AI seems to have popped up in all our contracts with big companies like Microsoft, Adobe, Apple and Google. As if by Trojan horse, we are now confronted with the “same product, better taste” powered by AI, whether we like it or not, no matter the consequences. The way they introduced AI to us has changed the way we think of product adoption forever, or at the very least should open our eyes to agency and choice.

This article is a continuation of the discussion held at Tech, What the Heck, and on our podcast It's Just A Model, with Ron Kersic and me.

This article is written on the premise that we are still finding out a lot about AI. Despite its proliferation in various new and existing services, we (tech-minded and people-minded people) are still learning what works, what fails and what’s desirable. In particular, the strengths and weaknesses AI might have in usability, safety, reliability and new use cases are at once ignored and talked about everywhere.

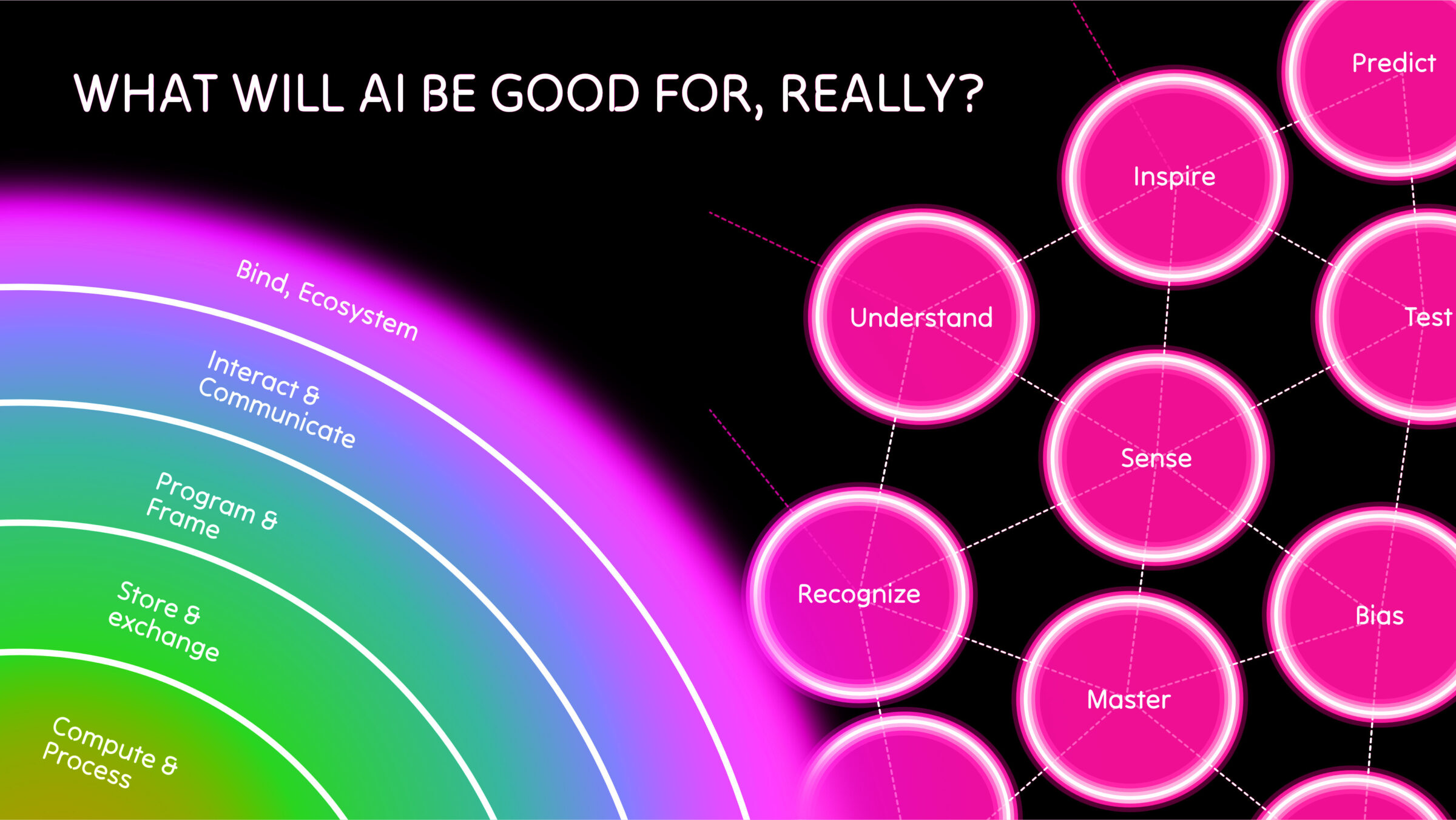

What does AI do in our products? How should we apply it? Where is it safe and invaluable?

AI does not show itself as a feature or functionality, but as a magical yet fundamental new ingredient, or property. Where we would usually see a digital technology as part of a single layer of the tech stack, AI seems to be applicable as a property of most, if not all, layers. AI offers new ways of seeing, saying, learning and inspiring, from our compute layers, with new chips that accelerate cognitive processes, to the interaction layer, with faces, language and other empathic patterns.

The Trojan Adoption

With AI as a new property that might enhance services and create opportunities for new features, it’s hard to see the impact of its introduction. Sometimes it’s just marketing hype, as with many new products, technologies and companies, but AI might, without us noticing, change products and services fundamentally. Fundamentally as in: it needs more and different information to function (data, your data?). And fundamentally as in: the quality, expectations and safety change without us seeing it.

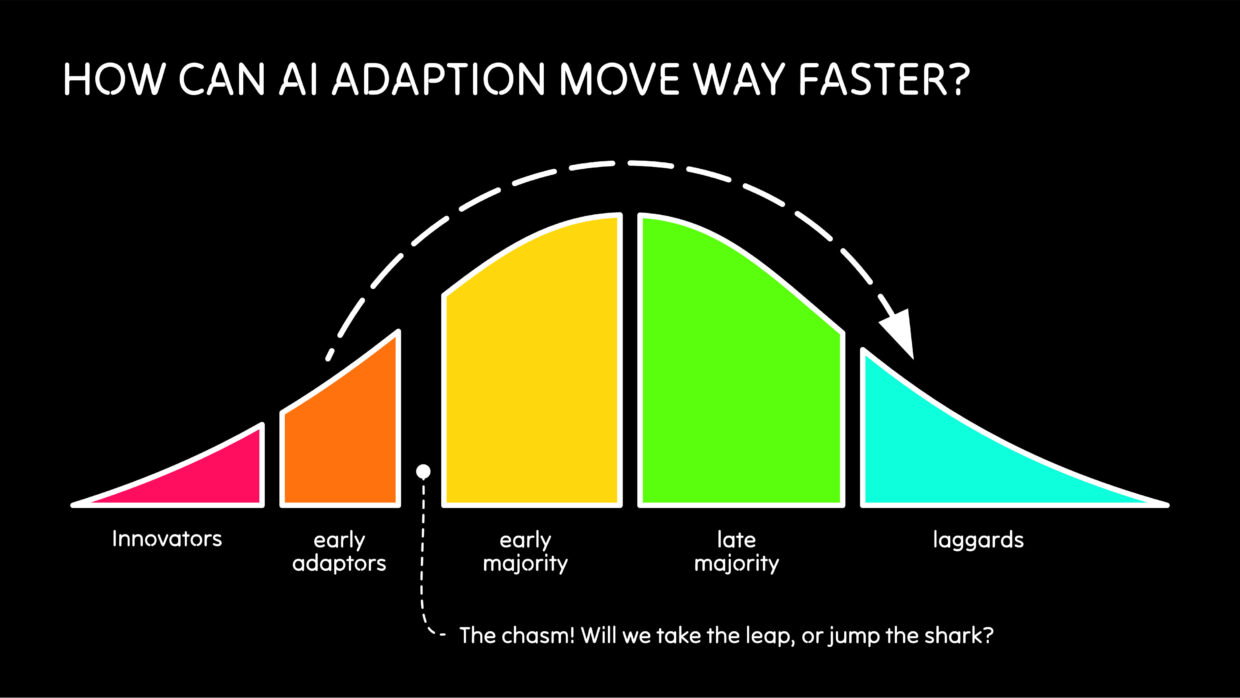

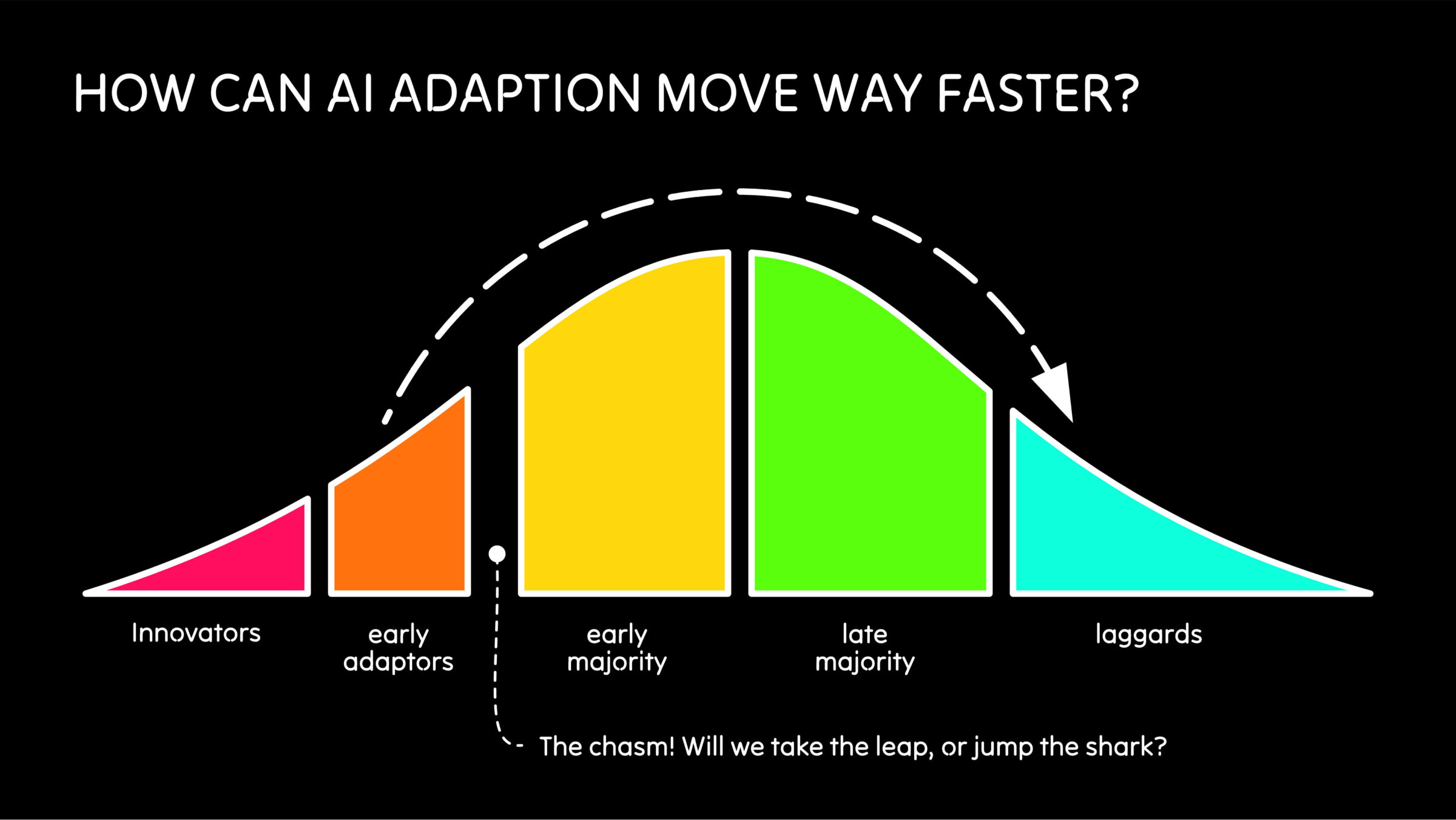

Because AI is not visible per se, the sneaky ‘upgrade’ probably needs more investigation: is this change as harmless as it’s portrayed to be, is it as revolutionary as it’s advertised to be, and who is benefitting here? Personally, I’m concerned with AI skipping the line in our adoption curve. This curve tells the story of people showing curiosity for a new piece of tech, product or service, experimenting with it, promoting it to the populace and finally integrating it into the lives of everybody else.

There is a very cool and natural fail-safe in this model: the chasm. It’s the gap between the early adopters and the moment the majority gets on board en masse with a new product. It’s where we show doubt and can even cancel the entire curve by not adopting it. This is very healthy because we let a huge number of people, in the role of critical customer/stakeholder, ‘decide with their wallets’ whether a technology, product or service will make it.

I’m concerned with AI skipping the line in our adoption curve.

Big tech skips the line of adoption

This time, however, with AI, a lot of companies are taking the plunge for us! Like a Trojan horse, AI is now part of our lives, and I guess we all now have to deal with the possible consequences? No natural checks and balances, no logical use cases, no apparent value: something called ‘AI’ is just there, warts and all.

We have no say, not even afterwards, because we have already bought into the various companies. Adobe, Microsoft, Google, Apple and many more less visible companies provide us with services that are not only convenient, but critical for running our businesses, families, households and more.

Now messaging, office services, workplace management, customer service, financial planning and logistical fulfilment have AI at the core, in the interface, and in other future spaces the big companies have not come up with yet. All under the same, or a changed, contract: using your data, with untested quality, with uninsured and opaque processes, and blatantly changing the outcome and its properties.

Like a Trojan horse, AI is now part of our lives …

A little side step: I touched upon ‘uninsured’ processes. A good touchstone for the maturity of AI, and of any new technology in general, is the willingness of insurance companies to provide coverage for it. Most of the time, this relies on statistics around risks, behaviour and proliferation. You’ve seen this before with the introduction of electric vehicles (EVs) in our transportation landscape. People simply could not buy EVs until a company committed to the possible risk and financial impact of EVs in the world.

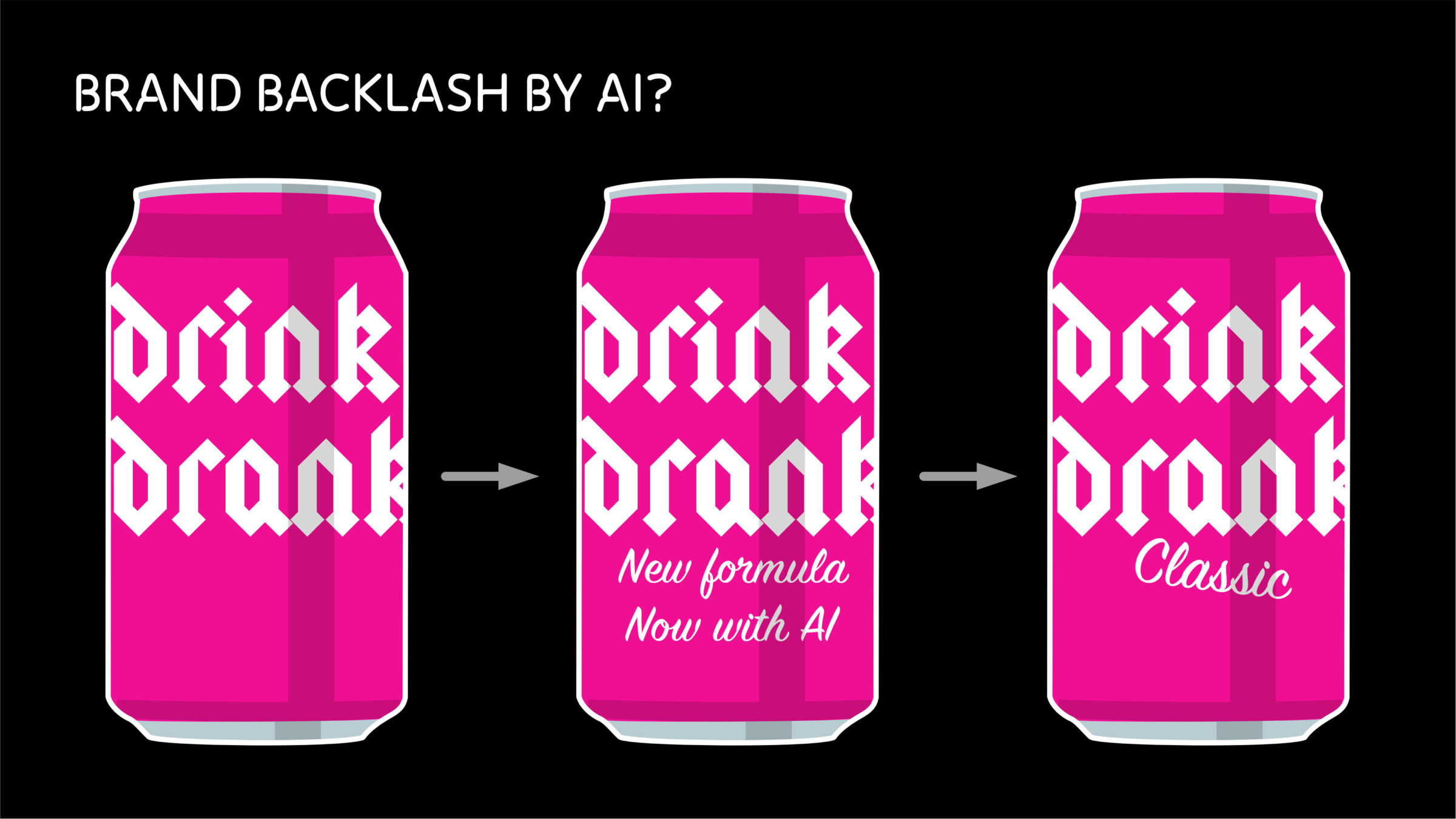

Brand backlash by premature and immature AI?

So do the big brands risk losing their credibility by adopting AI perhaps too early, before we have made sense of it? Is this Trojan AI a wise step, given that it skips adoption phases and runs ahead of the legislative homework, the research, the backing for financial, behavioural and societal consequences, and plain gut feeling?

We’ve seen all kinds of changes to successful brands and formulas that went unnoticed or were unwanted, and created an outcry that made a brand, or even an industry, retrace its steps. I think of a famous beverage, 3D screens, toothpaste brands making foodstuffs and badly timed music business models.

These were brands that seemed to be motivated by ‘because we can’, or ‘because we can’t afford not to’, and of course by the wish to create new needs, cheaper production, etc. Funnily enough, the adoption of these new products, or changes in products, eventually caught up with us, or became the basis of more refined new services.

Still, as with the beverage company and its new recipe, and more recently with the creative productivity company, one-sidedly and fundamentally changing both the relationship and the service provided can result in serious and tangible economic repercussions. I would involve more people in this process.

Finally, is AI too early, is AI holding us hostage?

AI is still very much in the ‘wonderment’ phase. We use it for everything, with varying degrees of success and doing varying degrees of harm along the way. It looks like this is par for the course from now on. However, as people in companies, governments, families and other contexts, we need to be vigilant.

As the creative productivity company has experienced, a silly and frivolous introduction of AI with a new contract is an adventure one should not take lightly. People still have the power to cancel you, and that relationship is hard to mend.

I’m a bit more wary of the less visible introductions of AI in products like productivity, fulfilment, customer service and financial services. These markets are often less crowded and mostly dominated by one or two behemoths that happily make use of that power to both experiment with and adopt new technology from their partners.

So, you’re mostly in control, but it is a power that needs a bit more practice. Trust, safety and insurance will be the key words in discussions on this topic.