Involving, and listening to, insurance experts might be the best route to integrating new tech into our lives quickly and safely, without fuss. The main problem with new technology is that people are still figuring it out: using it in ways it’s not meant to be used and unwittingly causing real damage. Here’s an anthropological perspective on A.I. in society and on how we can actually make it safe, rather than settling for the false sense of security that very general and toothless legislation provides.

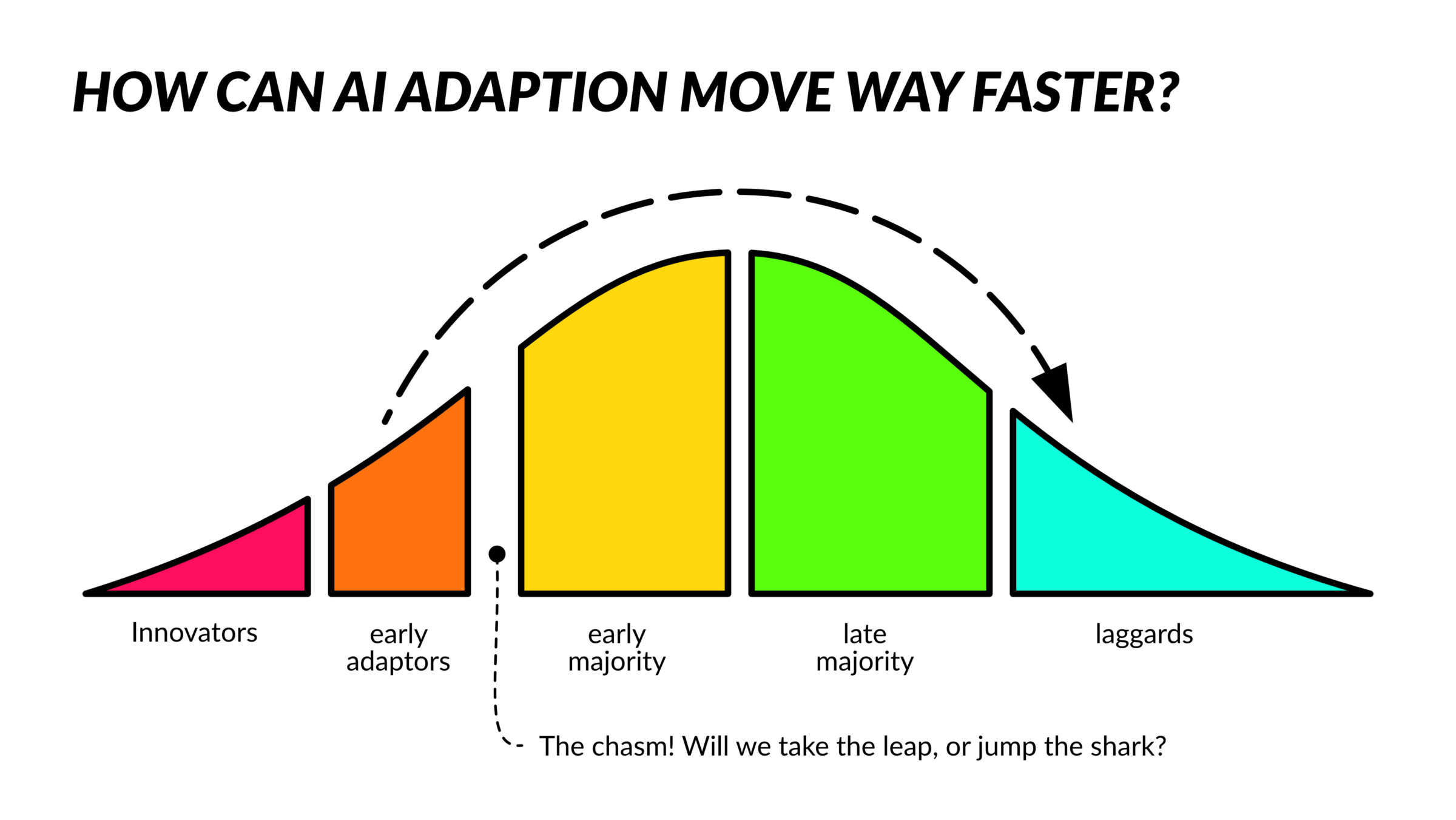

AI, just like digital, big data, Web 2.0 and 3.0, crypto and machine learning, goes through a common sentimental timeline: from “it solves everything”, via “it needs legislation” and “it ruins everything”, to “it’s useful, but cannot be trusted” and “we can use it with protection”. A.I. is currently at the “it needs legislation” station: we see the potential, realize we cannot oversee its impact and want to protect ourselves. Legislation might work, but it probably won’t before it’s too late.

Too early in the game to make rules

I just returned from a meeting of government officials discussing how to integrate AI, and specifically how to make sure it’s done safely and within the protective legislation around privacy and data sovereignty. Basically, they are writing the rules for AI usage without knowing most, if not all, of its possible uses. It seems both too late and premature at the same time.

It’s too early to make rules for using AI because, like all people, most of us have a limited creative capacity to dream about, or have profound nightmares about, things we have not yet experienced.

Our AI dreams are about a product or service being autonomously helpful, like a car, a messaging robot, or an expertise-feigning Cyrano. And our nightmares are basically the same, but destructive, sociopathic and callously lying. Those dreams and nightmares are fine, especially for a topic as complex and ethereal as AI, but using them to write the rules of its usage is nonsensical.

They skipped the hype cycle: forcing legislation

Although I was surrounded by willing, and in some cases able, people yesterday, the rules and any resulting legislation will come too late. Most of the products and services that organisations, both public and private, currently use are already laden with, or supported by, AI. That little writing assistant, the advanced search and possibly some research tools too are just the AI we can see.

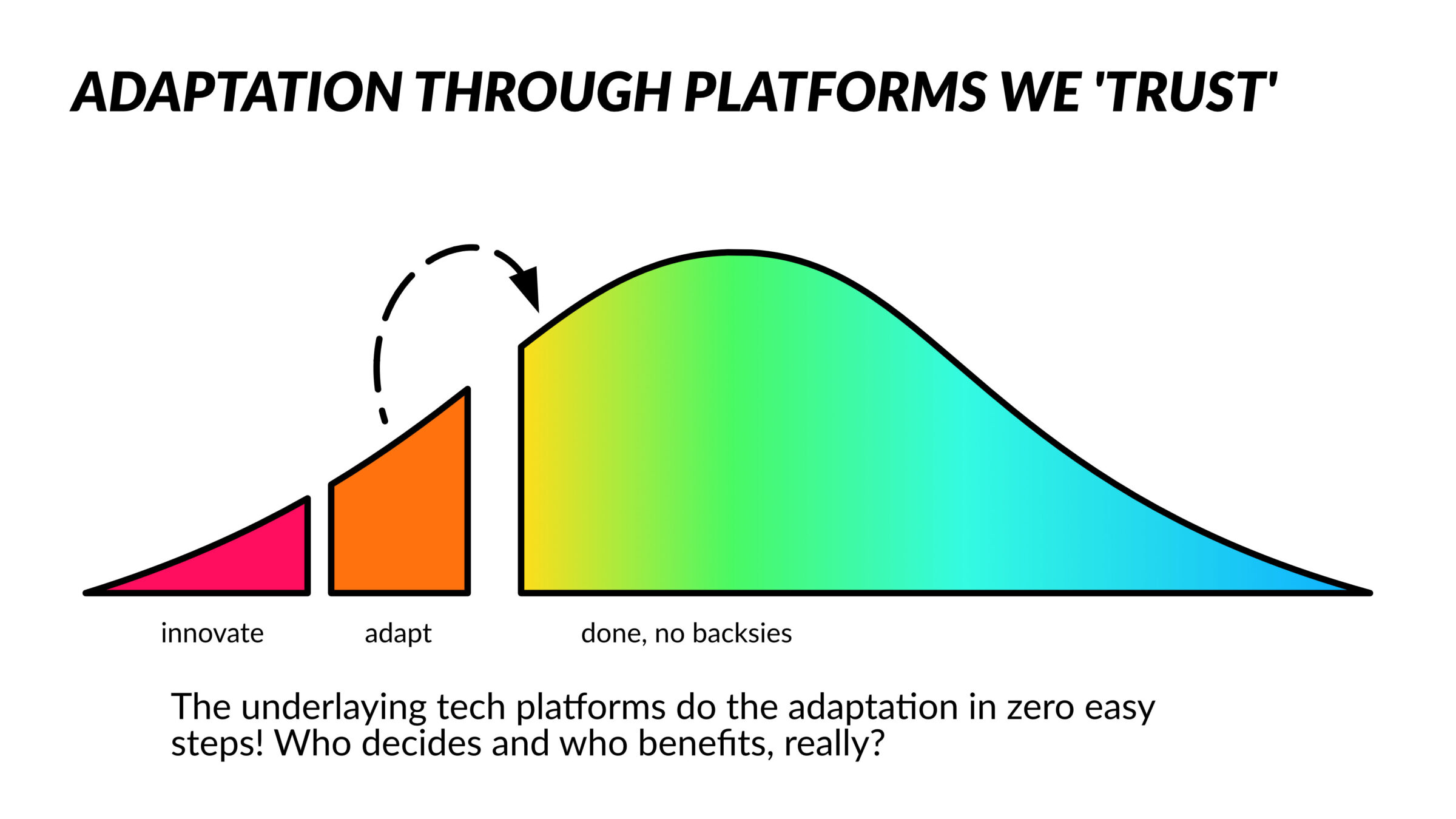

We didn’t ask for AI in Word, but because we are all customers of the same company, and that company needs to seem innovative in its space, we got it AND we’re paying for it. The big five tech companies have skipped the hype cycle for us and integrated AI without our consent.

The only thing we can do against this unexpected encroachment on our sense of choice is to say: no, stop this, we want our ‘old’ AI-less service. And because maintaining two or more versions of a service is a strain on any company, we got pushback: products without AI will become more expensive.

Industries and companies have aimed their powerful lobbying machinery at us and flooded the EU with all kinds of AI evangelists. That’s why we are in the process of writing legislation: to change the one thing we control, our constitutional compatibility with AI products and services. Hence all the talk about privacy, safety, ownership and strategic (digital) sovereignty.

Answering the unanswerable question with money

People finding themselves overwhelmed by technological revolutions, or things touted as such, is nothing new. Most recently we have had battery-powered vehicles: a new way to power a well-known concept (the car). While the public was fretting about the performance and range of EVs, most governments were blocking their certification because of the vast number of uncertainties, such as safety, infrastructural impact and the resulting economic impact.

The core argument was: we cannot accept an uninsured car, and these cars cannot be insured because we have no long-term data to certify them, nor the knowledge to devise the certifications they would need to become insurable. That phrasing was perhaps the biggest mistake governments made: putting insurability first.

The insurance industry agreed: without data and without a well-informed legislative partner, no insurance on EVs could be granted. The risk was simply too high. And then Tesla made a brilliant move: it leveraged its vast capital to create an insurance company that covered the risks of driving an EV, and subsequently a ‘self-driving’ car. The Teslas were insured, certified because of it, and fast-tracked through legislative problems.

Basically, Tesla answered the unanswerable questions of risk with a huge pile of money. The standards for environmental impact and safety that mitigate such concerns were created in the years after the insurance. Meanwhile, Tesla was making bank with a temporary monopoly on insurable EVs. Soon the rest of the car industry and the other insurance companies were forced to fall into lockstep to break that monopoly.

Unclear and opaque AI is uninsurable

So should OpenAI, Microsoft, Google, X, Amazon, Oracle and other AI companies follow Tesla’s example? Well, that depends. If markets continue to deflate, I think there’s no money in the world to cover the risks of using AI in any context. The insatiable need for power and chips seems to be consuming most capital at a foolhardy clip at the moment; even with national support, that seems unsustainable. But AI has a bigger challenge, which I mentioned before.

The real challenge for AI is that it’s too nebulous a topic. We have seen implementations of AI, such as LLMs in the form of chatbots and productivity services, but these are, to me, quite feeble and superficial examples of its power. As I mentioned before: we, as a people, are incapable of dreaming, or having nightmares, about a topic we cannot relate to. That means we cannot foresee future applications of AI or new incarnations of AI services (like AGI, AI agents and more), we cannot foresee the risks of those services, and so we cannot make rules that cover those risks.

Having said that, we can discover, and choose, which AI services are insurable! We can collect data on the applications and usage of specific services, and ask the big tech companies to put their money where their mouth is. This will also lead to reasonable certifications of what is happening inside the AI, what we can expect of it, and what it cannot, or may not, do.

Concluding: insurance brings specificity, clarity, usability and accountability

Putting AI applications through the wringer, as we have done for many safety concerns about products and services in our society, will help us with the three main considerations we need to settle before accepting a specific AI application in services and products:

- Clarity: we need to know what an AI contributes to the product or service. We need to know how it is safeguarded against usage outside its use case. We need to know the possible damage and risks it can and may cause during its use and afterwards.

- Usability: building on that clarity, users also need to know what to expect in terms of output and performance improvement, and what is outside the scope of its capabilities. That will increase usability. For example, people ask again and again for truthful and accurate output from common LLM chatbots. However mathematically probable the output of LLMs may be, we cannot hold them accountable for it, nor should we use them to create unbiased and accurate information.

- Accountability: the use cases where we do find AI usable, safe and indeed reliable should go into its accountability contract. One could opt for certification, strengthened by legislation, oversight and enforcement, but I think reasonable insurance for a specific set of AI applications would be the ultimate outcome.

There is, of course, a caveat: people don’t really trust insurance companies. They have shown that their inherent need to control and embiggen their bottom line is their main concern. This has led to a wide variety of legalese, loopholes and fine print that we have apparently accepted by signing the insurance contract.

That’s why I speak of an insurance-like mechanism, by which I mean a fact-, data- and statistics-based financial product that covers all, or most, current and future costs of owning or using a service or product enhanced by AI.

Finally

All that waiting for data, asking for proof and testing products might hamper innovation. That’s the biggest concern companies in both the EU and the USA have whipped up against all implemented and upcoming legislation.

I beg to differ: it hampers behaviour and properties of products and services that we have tolerated for too long. The point of this legislation is to create and maintain the society we would like to live in. Are the current legislative programs a bit overbearing and perhaps even behind the times? Perhaps!

I do hope that legislative efforts in the EU and the USA will evolve over time, empowering both innovation and a safe society, insured against known risks rather than imagined ones.