Your AI might be delusional, and the cause is probably hackers, confusion patterns and musicians? Your agentic AI could make important decisions based on data that was poisoned at the well, and that could push it past the reliability boundaries AI companies have set for it. What if I told you these poisoned models cannot be detected until you start using them?

AI models, the core of an AI's ability to understand, can be poisoned! By poisoning I mean: making the AI see the world in a different way, or even see a different world altogether. This is the conclusion of Benn Jordan, a musician with very broad interests, including how AI works and specifically how music-generating AI works. He found a way to make music-generating models hear different things while listening to music. To us it sounds like one thing, to the AI like something that is not music at all.

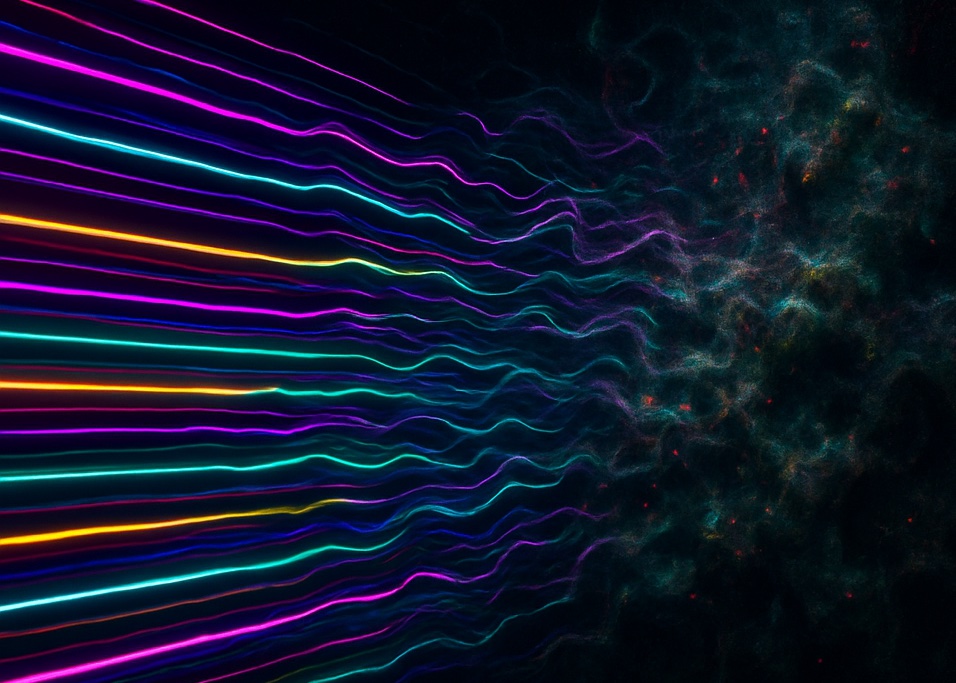

Confusing the AI

AI seems to want to convince us that it can listen to you, understand you and see the world like you do. To do so, it tries to replicate our words, sounds, images and music based on the patterns it ‘reads’, ‘sees’ and ‘hears’. But AI does not ‘hear’ things. It sees data, data that has patterns, and these patterns are expected to be predictable so they can be replicated. In music, this is a problem. Please check out Benn’s video; he can explain this part of the story way better than I can.

In short, he found a way to create music files that sound great to people, but sound very different to the software that trains a model. As a result, the AI is unable to reproduce the music and instead creates some weird, atonal gobbledygook. The music he creates has been confused, or as he puts it ‘poisoned’, with patterns that only an AI can hear and that are inaudible to people.
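To make the idea a bit more concrete, here is a toy sketch in Python. It is not Benn's method, which his video covers. It only illustrates the general principle that a signal can change a lot for software while barely changing for a listener. Everything in it is an assumption made for illustration: the 440 Hz "music", the hypothetical 19 kHz hidden pattern, the amplitudes, and the helper names `tone`, `band_energy` and `snr_db`.

```python
import math

SAMPLE_RATE = 44_100  # samples per second (assumed CD-quality audio)

def tone(freq_hz, amplitude, n_samples):
    """Return a pure sine tone as a list of float samples."""
    return [amplitude * math.sin(2 * math.pi * freq_hz * i / SAMPLE_RATE)
            for i in range(n_samples)]

def band_energy(samples, freq_hz):
    """Estimate how strongly one frequency is present (single-bin DFT magnitude)."""
    re = sum(s * math.cos(2 * math.pi * freq_hz * i / SAMPLE_RATE)
             for i, s in enumerate(samples))
    im = sum(s * math.sin(2 * math.pi * freq_hz * i / SAMPLE_RATE)
             for i, s in enumerate(samples))
    return math.hypot(re, im) / len(samples)

def snr_db(clean, perturbed):
    """Signal-to-noise ratio in decibels between the clean and perturbed signals."""
    signal = sum(c * c for c in clean)
    noise = sum((p - c) ** 2 for c, p in zip(clean, perturbed))
    return 10 * math.log10(signal / noise)

n = 4410                             # 0.1 seconds of audio
music = tone(440.0, 0.5, n)          # stand-in for the audible music
hidden = tone(19_000.0, 0.005, n)    # hypothetical quiet, very high-pitched pattern
poisoned = [m + h for m, h in zip(music, hidden)]

clean_hf = band_energy(music, 19_000.0)        # what software "sees" at 19 kHz before
poisoned_hf = band_energy(poisoned, 19_000.0)  # and after the pattern is added
ratio_db = snr_db(music, poisoned)             # how small the change is overall

print(f"19 kHz energy, clean:    {clean_hf:.6f}")
print(f"19 kHz energy, poisoned: {poisoned_hf:.6f}")
print(f"Signal-to-noise ratio:   {ratio_db:.1f} dB")
```

The added pattern is a hundred times quieter than the music and sits near the upper edge of human hearing, so whether anyone notices it depends on the listener and the playback equipment. For software that looks at every frequency equally, though, a feature that was essentially absent is now clearly present. Real poisoning techniques are far more sophisticated than a single tone, but the mismatch between what people perceive and what the data contains is the same.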